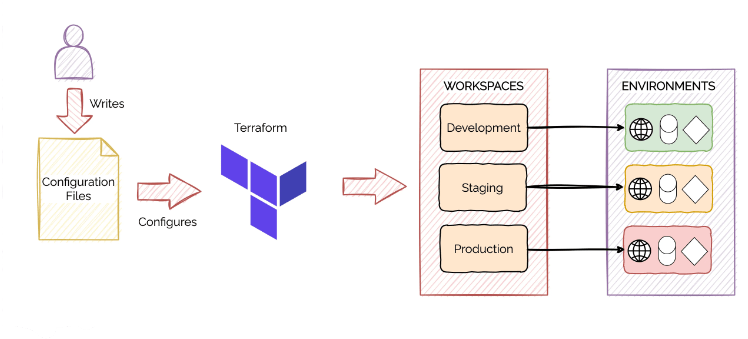

What is Terraform Workspace?

Workspaces in Terraform are simply independently managed state files. A workspace contains everything that Terraform needs to manage a given collection of infrastructure, and separate workspaces function like completely separate working directories. This lets us manage multiple environments from one configuration.

Let's say you have a piece of Terraform code and you want to create a resource in the dev, staging and production environments. Usually, you would end up with three separate copies of the code, or three different Terraform modules. But with Terraform workspaces you don't have to do that. You can keep the same code, just pass in some new variables, and boom, you are set.

Workspaces allow you to separate your state and infrastructure without changing anything in your code, which is exactly what you want when the same code base is deployed to multiple environments without overlap. In other words, workspaces create multiple state files for the same set of Terraform configuration files.

Each environment requires a separate state file to avoid collisions. With workspaces, the workspace name becomes part of the state file path (with the local backend, for example, non-default workspaces keep their state under terraform.tfstate.d/<workspace name>/), ensuring each workspace (environment) has its own state.
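To make the idea concrete, here is a minimal sketch of one configuration that adapts itself to the selected workspace. It assumes workspaces named dev, staging and prod, and the resource, the variable `ami_id` and the instance sizes are all hypothetical choices made for illustration. The only built-in piece is `terraform.workspace`, which holds the name of the current workspace.

```hcl
# Hypothetical input: the AMI to launch (not part of the original post).
variable "ami_id" {
  type = string
}

locals {
  # Illustrative per-environment sizes, keyed by workspace name.
  instance_sizes = {
    dev     = "t3.micro"
    staging = "t3.small"
    prod    = "t3.large"
  }

  # terraform.workspace is the name of the currently selected workspace.
  environment = terraform.workspace

  # Fall back to the smallest size for any workspace not listed above.
  instance_type = lookup(local.instance_sizes, local.environment, "t3.micro")
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = local.instance_type

  tags = {
    Name = "app-${local.environment}"
  }
}
```

You would create and switch workspaces with `terraform workspace new dev` and `terraform workspace select dev`, then run `terraform apply` as usual. Each workspace keeps its own state, so the same code produces a separate instance per environment.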

Remote Execution

Terraform Provisioners can be used to model specific actions on the local machine or a remote machine to prepare servers or other infrastructure objects for service, like installing a 3rd-party application after the creation of virtual machines.

There are mainly two types of Provisioner:

local-exec Provisioner: The local-exec provisioner invokes a local executable after a resource is created. This invokes a process on the machine running Terraform, not on the resource.

remote-exec Provisioner: The remote-exec provisioner invokes a script on a remote resource after it is created. This can be used to run a configuration management tool, bootstrap into a cluster, etc.
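As a rough sketch of the local-exec case, the block below runs a shell command on the machine where Terraform itself is running. The `null_resource`, its name and the log file are assumptions made for illustration. In real code the provisioner would usually sit inside the resource it relates to.

```hcl
# A placeholder resource used only to host the provisioner.
resource "null_resource" "announce" {
  provisioner "local-exec" {
    # Runs locally, on the machine executing Terraform, after creation.
    command = "echo 'resource created' >> provision.log"
  }
}
```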

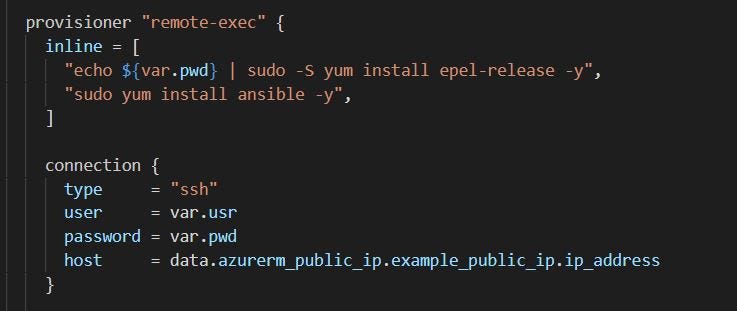

Now let's look at the remote-exec provisioner. It contains the provisioner type and a connection block, which describes how the connection to the VM will be made (SSH, WinRM, etc.).

In the code above, the provisioner used is "remote-exec". It connects to the VM over SSH using the username and password defined as variables in the Terraform code, targeting the public IP address fetched by the azurerm_public_ip data source. As soon as the connection is established, it installs the epel-release repo and then installs Ansible.

Now we can run terraform apply to start building the resources in Azure.
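For readers who cannot see the image, here is a text sketch of what such a configuration might look like. It is not a copy of the original code: the variable names, the data source label and the use of a `null_resource` to host the provisioner are all assumptions, and the `yum` commands assume a CentOS/RHEL-style VM.

```hcl
# Hypothetical inputs; the names are illustrative.
variable "admin_username" {
  type = string
}

variable "admin_password" {
  type      = string
  sensitive = true
}

variable "public_ip_name" {
  type = string
}

variable "resource_group_name" {
  type = string
}

# Look up the public IP address already assigned to the VM.
data "azurerm_public_ip" "vm" {
  name                = var.public_ip_name
  resource_group_name = var.resource_group_name
}

resource "null_resource" "install_ansible" {
  # How Terraform reaches the VM: SSH with username and password.
  connection {
    type     = "ssh"
    host     = data.azurerm_public_ip.vm.ip_address
    user     = var.admin_username
    password = var.admin_password
  }

  # Commands run on the remote VM once the connection is established.
  provisioner "remote-exec" {
    inline = [
      "sudo yum install -y epel-release",
      "sudo yum install -y ansible",
    ]
  }
}
```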

Collaboration in Terraform

Team collaboration is critical in software development. However, while team collaboration increases both development speed and quality, it may lead to some challenges when it comes to automation. By default, Terraform uses a local backend that stores the state file on your local machine. While this setting works fine for small teams of one or two developers, as the team's size increases, you might face the following challenges:

Shared storage: To update your infrastructure via Terraform, each team member needs access to the same Terraform state file.

Locking: Without locking, two team members might run the command `terraform apply` simultaneously, leading to conflicts, data loss, and state file corruption.

Secrets: All data in the Terraform state is stored in plain text.

For this reason, while it is a good practice to store your Terraform configuration files on a Version Control System, it’s discouraged to use it for your state file. So, the question is, if not on Git, where should we keep the state file?

To make collaboration easier, Terraform offers built-in support for remote backends. A remote backend is simply a remote location where the state file is stored. Terraform core pulls the latest state before applying changes to the target infrastructure and automatically pushes the newest version when provisioning is completed. Most remote backends support both locking and encryption of the state file in transit and at rest, which keeps 'unwanted eyes' away from it. Examples of remote backends are AWS S3, Azure Storage, and Google Cloud Storage.
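As a minimal sketch, a remote backend on Azure Storage could be configured as shown below. The resource group, storage account, container and key names are placeholders, and the storage account and container are assumed to exist already.

```hcl
terraform {
  backend "azurerm" {
    # All four values are placeholders; replace them with your own.
    resource_group_name  = "rg-terraform-state"
    storage_account_name = "tfstatestorage001"
    container_name       = "tfstate"
    key                  = "dev.terraform.tfstate"
  }
}
```

After adding or changing a backend block, run `terraform init` so Terraform can set up the backend and offer to migrate any existing local state.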

Terraform Best Practices

Let’s talk about some of the best practices that should be followed while using Terraform.

i)Structuring

When you are working on a large production infrastructure project using Terraform, you must follow a proper directory structure to handle the complexity that builds up in the project. It is best to have separate directories for different purposes.

For example, if you are using Terraform in development, staging, and production environments, have separate directories for each of them.

    terraform_project/
    ├── dev
    │   ├── main.tf
    │   ├── outputs.tf
    │   └── variables.tf
    ├── modules
    │   ├── ec2
    │   │   ├── ec2.tf
    │   │   └── main.tf
    │   └── vpc
    │       ├── main.tf
    │       └── vpc.tf
    ├── prod
    │   ├── main.tf
    │   ├── outputs.tf
    │   └── variables.tf
    └── stg
        ├── main.tf
        ├── outputs.tf
        └── variables.tf

    6 directories, 13 files

Even the Terraform configurations should be separate because, after a period, the configurations of a growing infrastructure will become complex.

For example, you can write all your Terraform code (modules, resources, variables, outputs) inside the `main.tf` file itself, but keeping variables and outputs in separate files makes it more readable and easier to understand.

ii)Latest Version

The Terraform development community is very active, and new functionality is released frequently. It is recommended to move to the latest version of Terraform whenever a new major release comes out, while the upgrade is still easy.

If you skip multiple major releases, upgrading will become very complex.

Run the `terraform -v` command to check your installed version and see whether a newer one is available.
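Alongside staying current, it can help to state in the configuration which versions it is meant to run with, so every team member and pipeline uses a compatible release. The sketch below shows one way to do that. The version numbers and the choice of the AWS provider are illustrative assumptions, not recommendations from the original post.

```hcl
terraform {
  # Illustrative constraint: any 1.x release from 1.5.0 onwards.
  required_version = ">= 1.5.0, < 2.0.0"

  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Illustrative constraint: any 5.x release of the provider.
      version = "~> 5.0"
    }
  }
}
```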

iii)Automate your Deployment with a CI / CD Pipeline

Terraform automates several operations on its own. More specifically, it creates, modifies, and versions your cloud and on-prem resources. Many teams run it locally, but using Terraform in a Continuous Integration and Continuous Delivery/Deployment (CI/CD) pipeline can improve your organization's performance and ensure consistent deployments.

Running Terraform locally implies that all dependencies are in place: Terraform is installed and available on the local machine, and providers are kept in the .terraform directory. This is not the case when you move to stateless pipelines. One of the most frequent solutions is to use a Docker image with a Terraform binary.

HashiCorp publishes an official Terraform Docker image (hashicorp/terraform) that can be used for this. If you ever change your CI/CD server, you can move the same containerized setup over with little effort.

Before deploying infrastructure to the production environment, you can also test it from Docker containers, which are very easy to deploy. By combining Terraform and Docker, you get portable, reusable, repeatable infrastructure.

iv)Lock State File

There can be multiple scenarios where more than one developer tries to run the Terraform configuration at the same time. This can lead to corruption of the Terraform state file or even data loss. The locking mechanism helps to prevent such scenarios. It makes sure that only one person is running the Terraform configuration at any given time, so there is no conflict.

Here is an example of locking a state file stored at a remote location, using DynamoDB. Note that the S3 backend expects the table's partition key to be a string attribute named LockID.

    # DynamoDB table that holds the state lock.
    resource "aws_dynamodb_table" "terraform_state_lock" {
      name           = "terraform-locking"
      read_capacity  = 3
      write_capacity = 3
      hash_key       = "LockID" # the S3 backend requires this exact key name

      attribute {
        name = "LockID"
        type = "S"
      }
    }

    # S3 backend that stores the state and uses the table above for locking.
    terraform {
      backend "s3" {
        bucket         = "s3-terraform-bucket"
        key            = "vpc/terraform.tfstate"
        region         = "us-east-2"
        dynamodb_table = "terraform-locking"
      }
    }

When multiple users try to access the state file, the DynamoDB table name and primary key are used for state locking and for maintaining consistency.

Terraform Cloud

Terraform Cloud is an application that offers a centralized platform to teams that use Terraform to provision infrastructure. It is a cloud infrastructure management tool that allows users to easily create and remotely manage their infrastructure consistently and efficiently.

--Terraform Cloud vs. Terraform Enterprise

Alongside Terraform Cloud, HashiCorp also offers an Enterprise solution. The main difference is that Terraform Enterprise is self-hosted and offered as a private installation rather than a SaaS solution. Terraform Enterprise offers most of the same features as the Terraform Cloud Business tier.

What is the Terraform registry?

The Terraform Registry is an interactive resource for discovering a wide selection of integrations (providers), configuration packages (modules), and security rules (policies) for use with Terraform. The Registry includes solutions developed by HashiCorp, third-party vendors, and the Terraform community.
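As a small sketch of how a Registry module is consumed, the block below pulls the community VPC module for AWS by its Registry address. The version constraint, the names and the CIDR ranges are illustrative assumptions. Check the module's Registry page for the inputs supported by the version you pick.

```hcl
module "vpc" {
  # Registry address format: <namespace>/<name>/<provider>
  source = "terraform-aws-modules/vpc/aws"

  # Illustrative constraint; pin to a version you have tested.
  version = "~> 5.0"

  # Illustrative input values.
  name            = "demo-vpc"
  cidr            = "10.0.0.0/16"
  azs             = ["us-east-2a", "us-east-2b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]
}
```

Running `terraform init` downloads the module from the Registry into the working directory.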

Github Link:

https://github.com/SudipaDas/TerraWeek.git

If this post was helpful, please follow and click the 💚 button below to show your support.

_Thank you for reading!_

_Sudipa_